“We want a real time data warehouse” said the IT Director.

“OK, so at the moment you’re doing a daily refresh of the warehouse and the cubes, right?” I replied.

“That’s right, but we have always wanted real time” was the reply.

“Well, this is possible, but a lot of investment would have to be made. First you would have to upgrade the disks from the 10K RPM ones that you have to a combination of at least 15K RPM disks for the warm data and SSDs for the hot data. Also, I think that the throughput on the backplane of the SAN is too slow, we need to get them…”

“Hold on a minute!” interrupted the IT Director. “I was hoping we could make use of the kit we have.”

“OK, but to get real time, we need to have the supporting hardware to meet that need, and if you’re not willing to make the investments, I can’t see how it could be achieved. Let’s start again. What does real time really mean for your business…”

And after a couple of hours…

“OK, so you agree that at best, with the infrastructure we have in place, we are looking at a half day refresh of the cubes?” I concluded.

“Yes, but if you can sprinkle some of your magic and get that down, that would be great!”

Sound familiar?

Let’s consider another conversation.

“We have a range of data sources, mainly relational, but we do have some spreadsheets and XML files that we want to incorporate within the solution,” said the BI developer. “Oh, and Delia in procurement has an Access database with information that we use as well.”

“Sure, that won’t be a problem. We can extract those files and place them in the staging area using SSIS.”

“We have video in the stores that records activity near the tills. We have always wanted to be able to store this data. At the moment the recordings are on tape and they are overwritten every week. We have had instances where we have had to review videos that were over a month old. Could we accommodate that?” stated the Solution Architect.

“It’s possible, but the size and nature of the data would mean a performance impact on the solution. Is it a mandatory requirement…”

Again, does this sound familiar?

In many of the Business Intelligence projects I have undertaken, there is usually an area of compromise. The most common one involves real time or near real time data warehousing. The desire for this outcome is there, which is no surprise: the velocity with which a business receives its information affects the operational reports that help it make decisions. But once the capital and operational costs of implementing even a near real time solution are realised, either the plans are abandoned due to complexity and cost, or the business decides to pull “reports” from the OLTP system to meet its needs, which, as we all know, places contention on the OLTP system.

The same compromises can also occur when it comes to the types of data being sourced for a solution. Many projects can handle the data that is strictly required for a BI project, but increasingly there is a thirst for a greater variety of data to become part of the overall solution. Traditional BI solutions can struggle to make chronological use of media files, and even at a simple level, other file types may be abandoned. And it’s not just the unstructured types of data that go unused. The sheer volume of data can be overwhelming for an organisation’s infrastructure.

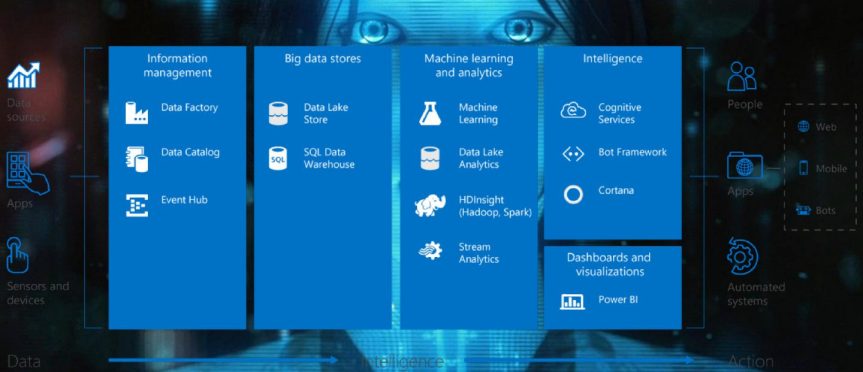

This is where the Cortana Intelligence Suite can help. Notice that the key variables for compromise in the above scenarios involve speed, data types and size. Put another way, some potential blockers to solution adoption arise because the infrastructure cannot handle the velocity, variety and/or volume of the data. These are the three tenets commonly used to define the characteristics of a Big Data solution. The Cortana Intelligence Suite has been engineered specifically to accommodate these scenarios, whilst still providing the ability to work with relational data stores.

So which technologies can help in these scenarios?

Velocity

A couple of options spring to mind. You could make use of the Azure Stream Analytics service to deal with real time analytical scenarios. Stream Analytics processes ingested events in real time, comparing multiple streams or comparing streams with historical values and models. It detects anomalies, transforms incoming data, triggers an alert when a specific error or condition appears in the stream, and displays this real-time data in your dashboard. Stream Analytics is integrated out-of-the-box with Azure Event Hubs to ingest millions of events per second. Event-driven data could include sensor data from IoT devices.
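To make the idea of "triggering an alert when a condition appears in the stream" more concrete, here is a minimal Python sketch of a tumbling window aggregation with a threshold alert. It is only an illustration of the concept and not the Stream Analytics API; the service itself is driven by a SQL-like query language. The function name, the event layout, the device names and the threshold are all hypothetical.

```python
from collections import defaultdict


def tumbling_window_alerts(events, window_seconds, threshold):
    """Group (timestamp, device_id, value) events into fixed, non-overlapping
    windows and return (window_start, device_id, average) for every window
    whose average value exceeds the threshold."""
    buckets = defaultdict(list)
    for timestamp, device_id, value in events:
        # Every event belongs to exactly one window, identified by its start.
        window_start = (timestamp // window_seconds) * window_seconds
        buckets[(window_start, device_id)].append(value)

    alerts = []
    for (window_start, device_id), values in sorted(buckets.items()):
        average = sum(values) / len(values)
        if average > threshold:
            alerts.append((window_start, device_id, average))
    return alerts


# Hypothetical sensor readings: (seconds since start, device, temperature).
events = [
    (1, "till-1", 20.0),
    (4, "till-1", 22.0),
    (12, "till-1", 35.0),
    (15, "till-1", 37.0),
    (13, "till-2", 21.0),
]

alerts = tumbling_window_alerts(events, window_seconds=10, threshold=30.0)
print(alerts)
```

In this made-up data only the second ten-second window for "till-1" averages above the threshold, so that is the only window reported. A real streaming engine would emit each window as it closes rather than waiting for the full list of events.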

A second option is to use a Storm cluster to process real time data. Storm is a distributed real-time computation system for processing large volumes of high-velocity data. Storm is extremely fast, with the ability to process over a million records per second per node on a cluster of modest size.

Volume

A range of technologies can be used to handle volume. Azure SQL Data Warehouse can provide storage for terabytes of relational data, which can be integrated with unstructured data using PolyBase.

HDInsight is an Apache Hadoop implementation that is globally distributed. It’s a service that allows you to easily build a Hadoop cluster in minutes when you need it, and tear it down after you run your MapReduce jobs to process the data. It can handle huge volumes of semi-structured and unstructured data. There are several different types of cluster available. We have seen two examples here with Storm and Hadoop. I will cover the differences in another post.
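For readers who have not met MapReduce before, the pattern can be sketched in a few lines of plain Python. This is a single-machine illustration of the map, shuffle and reduce phases only; it does not use Hadoop or HDInsight, and the record format, store names and function names are hypothetical.

```python
from collections import defaultdict


def map_phase(line):
    """Turn one raw 'store,amount' line into a (key, value) pair."""
    store, amount = line.split(",")
    return store.strip(), float(amount)


def shuffle_phase(pairs):
    """Group all values by key, as the framework would between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Collapse all values for one key into a single result."""
    return key, sum(values)


# Hypothetical sales lines; on a cluster these would be split across many nodes.
lines = ["leeds, 10.0", "york, 5.0", "leeds, 2.5"]

mapped = [map_phase(line) for line in lines]
totals = dict(reduce_phase(key, values) for key, values in shuffle_phase(mapped).items())
print(totals)
```

The value of a Hadoop cluster is that the map and reduce steps run in parallel across many machines, which is what lets the same simple pattern scale to very large volumes.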

Variety

A number of the CIS technologies can handle a wide range of data types. For example, you can use a CTAS (CREATE TABLE AS SELECT) statement to point to a semi-structured file so that it is decomposed before being stored in Azure SQL Data Warehouse. Azure Data Factory can connect to a wide range of data sources for extraction. Azure Data Lake can act as a repository for storing the data.
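As a rough illustration of what "decomposing" a semi-structured file means, the Python sketch below flattens a nested JSON document into relational-style rows. It is not a CTAS statement and does not touch any Azure service; the document layout, field names and function name are assumptions made up for the example.

```python
import json


def flatten_orders(document):
    """Flatten a nested JSON document of orders into one row per order line:
    (order_id, store, sku, quantity)."""
    rows = []
    for order in json.loads(document)["orders"]:
        for line in order["lines"]:
            rows.append((order["id"], order["store"], line["sku"], line["qty"]))
    return rows


# Hypothetical semi-structured input: each order nests a list of lines.
document = """
{
  "orders": [
    {"id": 1, "store": "leeds", "lines": [{"sku": "A1", "qty": 2}, {"sku": "B7", "qty": 1}]},
    {"id": 2, "store": "york", "lines": [{"sku": "A1", "qty": 5}]}
  ]
}
"""

rows = flatten_orders(document)
for row in rows:
    print(row)
```

Each nested order line becomes one flat row, which is the shape a relational table in the warehouse expects.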

The Cortana Intelligence Suite provides a wide range of technologies that can deal with the barriers that have stood in the way of complete BI solutions in the past. As the infrastructure is hosted in Azure, these blockers can now be removed, with consideration given primarily to the operational expenditure required to implement a solution and less to the capital expenditure. So the IT Director may be more inclined to look into the potential of a real time solution.

So next time you are around a table talking about your latest BI project, remember that the Cortana Intelligence Suite has the capabilities to deal with velocity, volume and variety. Take a look at this interview I did in Holland for a summary.